Using Custom Metadata Types for dependency injection is an effective way to write Salesforce code that is easy to extend. Even when the design is never actually extended (say, for a client whose requirements do not change), the approach is still worthwhile: it promotes separation of concerns and forces dependencies to be modularized, which fits well with the package-centric view of code organization in Salesforce DX.

Andrew Fawcett recently built a versatile dependency injection library for Salesforce, Force DI, and has discussed it at length on his blog. At Nebula, we have been using a similar technique in a number of scenarios. While building a new application with dependency injection, I used a generic and tidy way of creating object instances with parameters. I can't recall whether I picked up the idea from a StackExchange post or came up with it myself, but either way it is worth sharing.

The Application: Scheduler Manager

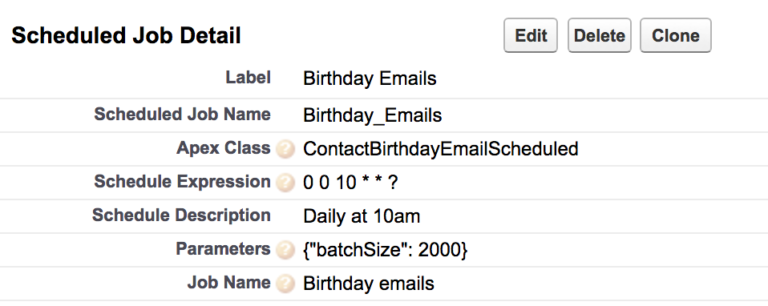

The application is a generic Scheduled Job Manager. The problem with scheduled jobs as they stand is that once a job has been started, the parameters used to start it are lost. For example, a common use case is a scheduled job that starts a batch, with a parameter specifying the batch size. When the scheduled job is started from the Developer Console, that batch size is not stored anywhere easily accessible (unless you build something custom for that purpose). The Scheduled Job Manager is driven by custom metadata records that describe how to start each job: which Schedulable class to run, the cron string to use, and the parameters to pass to the class when it is created. This gives a permanent record of the parameters every scheduled job was started with.

Consider a scenario where we have a scheduled job to dispatch birthday emails to our Contacts. The corresponding scheduled class might resemble the following:

```apex
public class ContactBirthdayEmailScheduled implements Schedulable {

    private Integer batchSize;

    public ContactBirthdayEmailScheduled() {}

    public ContactBirthdayEmailScheduled(Integer batchSize) {
        this.batchSize = batchSize;
    }

    public void execute(SchedulableContext sc) {
        // ContactBirthdayEmailBatch is the batch class (defined elsewhere) that sends the emails
        Database.executeBatch(new ContactBirthdayEmailBatch(), batchSize);
    }
}
```
Without the Scheduled Job Manager, you would start this job in production by running something like the following, for example from the Developer Console:

```apex
System.schedule('Send birthday emails', '0 0 10 * * ?', new ContactBirthdayEmailScheduled(2000));
```

Six months later, someone adds a number of Process Builders to Contact, and the batch size now has to come down. That raises the questions: “What batch size did I use six months ago? How much should I reduce it by? Is it down to luck?” Chances are nobody remembers the original value, so choosing the new one becomes guesswork. The Scheduled Job Manager removes that uncertainty, because the batch size is recorded in the custom metadata.

Utilizing Parameters

To build the Scheduled Job Manager, we already know we can create an instance of the Schedulable class from the class name stored in the metadata:

```apex
Type scheduledClassType = Type.forName(thisJob.Apex_Class__c);
Schedulable scheduledInstance = (Schedulable) scheduledClassType.newInstance();
```
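Because the class name comes from configuration, it can be wrong. Type.forName() returns null rather than throwing when no matching class is visible, and newInstance() needs a public no-argument constructor. A minimal sketch of a guarded version, assuming a hypothetical SchedulableFactory class and ConfigurationException type (neither is part of the manager described here):

```apex
public class SchedulableFactory {

    // Hypothetical exception for bad values in the custom metadata
    public class ConfigurationException extends Exception {}

    // Creates a Schedulable from a class name such as the value of Apex_Class__c.
    // newInstance() calls the public no-argument constructor, so the class needs one.
    public static Schedulable create(String className) {
        Type scheduledClassType = Type.forName(className);
        if (scheduledClassType == null) {
            // Type.forName returns null for an unknown class instead of throwing
            throw new ConfigurationException('No Apex class found named ' + className);
        }
        Object instance = scheduledClassType.newInstance();
        if (!(instance instanceof Schedulable)) {
            throw new ConfigurationException(className + ' does not implement Schedulable');
        }
        return (Schedulable) instance;
    }
}
```

Failing with a clear message at scheduling time is usually easier to diagnose than a NullPointerException or a TypeException from a bare cast.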

But how can we pass parameters into the Schedulable class generically? One approach, used by the Force DI package mentioned above, is to solve the problem with another layer of dependency injection. In Force DI, instead of naming the class you want to construct, you name a Provider class. The Provider has a newInstance(Object params) method; it knows which parameters to expect and enough about the type being constructed to set those parameters correctly. (The Provider can also be the class being constructed itself.)
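To make the Provider idea concrete, here is a sketch of the same shape in plain Apex. The InstanceProvider interface, BatchSizeJob and BatchSizeJobProvider are hypothetical names invented for illustration; this is not Force DI's actual interface, only a provider with a newInstance(Object params) method as described above:

```apex
public class ProviderExample {

    // Hypothetical provider interface: parameters in, constructed instance out
    public interface InstanceProvider {
        Object newInstance(Object params);
    }

    // Hypothetical stand-in for a Schedulable such as ContactBirthdayEmailScheduled
    public class BatchSizeJob implements Schedulable {
        public Integer batchSize { get; private set; }

        public BatchSizeJob(Integer batchSize) {
            this.batchSize = batchSize;
        }

        public void execute(SchedulableContext sc) {
            // A real job would start its batch here using batchSize
        }
    }

    // Knows that BatchSizeJob expects a single "batchSize" parameter
    public class BatchSizeJobProvider implements InstanceProvider {
        public Object newInstance(Object params) {
            Map<String, Object> values = (Map<String, Object>) params;
            return new BatchSizeJob((Integer) values.get('batchSize'));
        }
    }
}
```

The metadata would then name ProviderExample.BatchSizeJobProvider rather than the job class, and the manager would call the provider. The cost is one provider per class that needs parameters.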

The Provider approach in Force DI is clever, but there is an alternative: use JSON.deserialize() to populate the values directly:

```apex
Type scheduledClassType = Type.forName(thisJob.Apex_Class__c);
Schedulable scheduledInstance = (Schedulable) JSON.deserialize(thisJob.Parameters__c, scheduledClassType);
```
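Putting those two lines inside a loop over the metadata gives the core of a manager. This is a sketch under assumptions: the custom metadata type name Scheduled_Job__mdt and the Cron_String__c field are hypothetical, while Apex_Class__c and Parameters__c are the fields used above:

```apex
public class ScheduledJobManager {

    // Schedules one job per record of a hypothetical Scheduled_Job__mdt type.
    // Cron_String__c is an assumed field holding the cron expression.
    public static List<String> scheduleAll() {
        List<String> jobIds = new List<String>();
        for (Scheduled_Job__mdt thisJob : [
            SELECT DeveloperName, Apex_Class__c, Cron_String__c, Parameters__c
            FROM Scheduled_Job__mdt
        ]) {
            Type scheduledClassType = Type.forName(thisJob.Apex_Class__c);
            // Treat a blank Parameters__c as "no attributes to set"
            String params = String.isBlank(thisJob.Parameters__c) ? '{}' : thisJob.Parameters__c;
            // JSON.deserialize creates the instance and sets its attributes in one step
            Schedulable scheduledInstance = (Schedulable) JSON.deserialize(params, scheduledClassType);
            // DeveloperName doubles as the job name, so it must be unique among scheduled jobs
            jobIds.add(System.schedule(thisJob.DeveloperName, thisJob.Cron_String__c, scheduledInstance));
        }
        return jobIds;
    }
}
```

A record with Apex_Class__c set to ContactBirthdayEmailScheduled, Cron_String__c set to 0 0 10 * * ? and Parameters__c set to {"batchSize": 2000} would reproduce the Developer Console call shown earlier, with the batch size now recorded in metadata.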

JSON deserialization lets Apex do things that are otherwise impossible. First, it ignores access levels: attributes can be set as the object is created, including private ones. Second, it sets attributes generically. By contrast, if you have a Map<String, Object> of attribute names mapped to the values to set on a class, Apex has no reflection API to loop over the map and assign each value; you need one line of code per attribute. JSON deserialization removes that limitation.
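The Map<String, Object> case can be handled by serializing the map and deserializing the result into the target type. A minimal sketch; AttributeSetter, build() and EmailSettings are hypothetical names, and EmailSettings deliberately has private attributes and no setters:

```apex
public class AttributeSetter {

    // Hypothetical class with private attributes and no setters
    public class EmailSettings {
        private Integer batchSize;
        private String templateName;

        public Integer getBatchSize() { return batchSize; }
        public String getTemplateName() { return templateName; }
    }

    // Builds an instance of targetType with each map key applied to the
    // attribute of the same name, without one line of code per attribute.
    public static Object build(Map<String, Object> attributeValues, Type targetType) {
        return JSON.deserialize(JSON.serialize(attributeValues), targetType);
    }
}
```

One design note: JSON.deserialize ignores keys that match no attribute. If a misspelled key in the metadata should fail loudly, JSON.deserializeStrict can be used instead.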

There are drawbacks, though. JSON deserialization bypasses the constructor, so any processing the constructor would normally do while setting attributes never happens. It also only works for simple types, meaning attributes whose values JSON can represent directly. Despite these limitations, where it applies it is a straightforward solution.
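A small illustration of the constructor drawback, using a hypothetical ConstructorBypassDemo.Job class. Its one-argument constructor derives halfBatchSize from batchSize; building the object through JSON.deserialize sets batchSize but, per the behavior described above, that derivation does not run:

```apex
public class ConstructorBypassDemo {

    // Hypothetical class whose constructor does extra work beyond assignment
    public class Job {
        private Integer batchSize;
        private Integer halfBatchSize;

        // No-argument constructor, also what Type.newInstance() would call
        public Job() {}

        public Job(Integer batchSize) {
            this.batchSize = batchSize;
            // Derived value: this logic does not run when JSON.deserialize builds the object
            this.halfBatchSize = batchSize / 2;
        }

        public Integer getBatchSize() { return batchSize; }
        public Integer getHalfBatchSize() { return halfBatchSize; }
    }
}
```

If a derived value is needed, computing it in the getter from batchSize works no matter how the object was created.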

Two additional considerations:

- The Provider approach is not mutually exclusive with JSON deserialization. You could write a Force DI Provider that uses the JSON method, make it the default provider for most cases, and write more elaborate Providers only where necessary.
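A default provider of that kind might look like the following sketch. The InstanceProvider interface, JsonInstanceProvider and BatchSettings are hypothetical names, not Force DI's real API; the provider is given the target type up front and sets attributes by round-tripping its parameters through JSON:

```apex
public class JsonProviderExample {

    // Hypothetical provider interface: parameters in, constructed instance out
    public interface InstanceProvider {
        Object newInstance(Object params);
    }

    // Generic default provider. Accepts a JSON string or any serializable value (e.g. a Map).
    public class JsonInstanceProvider implements InstanceProvider {
        private final Type targetType;

        public JsonInstanceProvider(Type targetType) {
            this.targetType = targetType;
        }

        public Object newInstance(Object params) {
            if (params == null) {
                // No parameters: fall back to the public no-argument constructor
                return targetType.newInstance();
            }
            String json = params instanceof String ? (String) params : JSON.serialize(params);
            return JSON.deserialize(json, targetType);
        }
    }

    // Hypothetical data class used to exercise the provider
    public class BatchSettings {
        private Integer batchSize;
        public Integer getBatchSize() { return batchSize; }
    }
}
```

Classes whose constructors do real work would still get a hand-written provider; everything else could share this one.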